In the fast-paced world of software development, there’s constant pressure to deliver quickly, deploy often, and respond instantly. Amid this rush, testing can sometimes become a casualty of speed. But in the quest for agility, teams often forget a fundamental truth: quality takes time. A slow but sure approach to testing isn’t about dragging your feet — it’s about being deliberate, thoughtful, and methodical to ensure every release stands strong.

Why Slow Can Be Smart

- Quality Over Quantity

Speedy testing can miss the fine cracks — corner cases, performance anomalies, security loopholes. A slow but sure approach means giving attention to each requirement, reviewing each test case with care, and executing tests with precision. The result? A robust, reliable product with fewer post-release surprises.

- Prevention vs. Cure

It’s far more costly and time-consuming to fix bugs after release than during development. Taking time during testing to uncover and understand issues helps reduce technical debt and prevent production disasters. It’s the classic case of “measure twice, cut once.”

- Better Test Design

Slow testing doesn’t mean repetitive manual execution — it means thoughtful planning. It means taking time to:

- Write clear, reusable test cases.

- Automate where it makes sense.

- Define meaningful assertions.

- Anticipate user behaviour and edge cases.

This upfront investment improves test coverage and makes regression testing smoother and faster in the long run.
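To make "meaningful assertions" and "edge cases" concrete, here is a minimal sketch in Python. The function `apply_discount` and its rules are hypothetical, invented purely for illustration. The point is the shape of the test: one reusable table of cases that includes the boundaries, and assertions that check exact values and explain themselves when they fail.

```python
from decimal import Decimal


def apply_discount(price: Decimal, percent: int) -> Decimal:
    """Hypothetical function under test: reduce a price by a whole-number percentage."""
    if not 0 <= percent <= 100:
        raise ValueError(f"percent must be between 0 and 100, got {percent}")
    return (price * (100 - percent) / 100).quantize(Decimal("0.01"))


# One reusable table of cases, including the boundaries a rushed test would skip.
CASES = [
    (Decimal("100.00"), 0, Decimal("100.00")),   # no discount
    (Decimal("100.00"), 25, Decimal("75.00")),   # typical case
    (Decimal("100.00"), 100, Decimal("0.00")),   # full-discount boundary
    (Decimal("0.00"), 50, Decimal("0.00")),      # zero price
    (Decimal("19.99"), 10, Decimal("17.99")),    # result must round to cents
]


def test_apply_discount_cases() -> None:
    for price, percent, expected in CASES:
        actual = apply_discount(price, percent)
        # A meaningful assertion checks the exact value and says what went wrong.
        assert actual == expected, (
            f"{price} at {percent}% gave {actual}, expected {expected}"
        )


def test_apply_discount_rejects_out_of_range() -> None:
    for bad_percent in (-1, 101):
        try:
            apply_discount(Decimal("10.00"), bad_percent)
        except ValueError:
            continue  # the invalid input was rejected, as intended
        raise AssertionError(f"percent={bad_percent} should have raised ValueError")
```

Adding a newly discovered edge case then costs one extra line in `CASES`, which is part of what keeps regression testing cheap later on.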

Where “Slow but Sure” Makes the Most Impact

- Exploratory Testing

Instead of racing through scripted checks, testers can explore the application intuitively, discovering bugs automation might miss. It takes time, curiosity, and patience — but yields invaluable insights.

- Test Automation

Automation is often rushed, resulting in flaky, hard-to-maintain scripts. Slowing down to build a stable test framework, replace fixed sleeps with condition-based waits, use modular design, and review automation logic leads to more sustainable results.
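As one illustration of a meaningful wait, the sketch below polls for a condition instead of sleeping for a fixed time. The helper `wait_until` and the `FakeJob` stand-in are hypothetical names, not part of any particular framework. UI automation libraries such as Selenium ship their own explicit waits, which are usually the better choice when available.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 5.0, interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakeJob:
    """Stand-in for a slow system under test; reports done on the third check."""

    def __init__(self) -> None:
        self.checks = 0

    def is_done(self) -> bool:
        self.checks += 1
        return self.checks >= 3


def test_job_completes() -> None:
    job = FakeJob()
    # Returns as soon as the job is done, rather than always sleeping the full timeout.
    assert wait_until(job.is_done, timeout=2.0, interval=0.01)
```

A fixed `time.sleep(5)` is either too long (slow suite) or too short (flaky suite). A polling wait finishes as soon as the condition holds and fails with a clear error when it never does.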

Test-Fast: Fast to trust, not just fast to run.

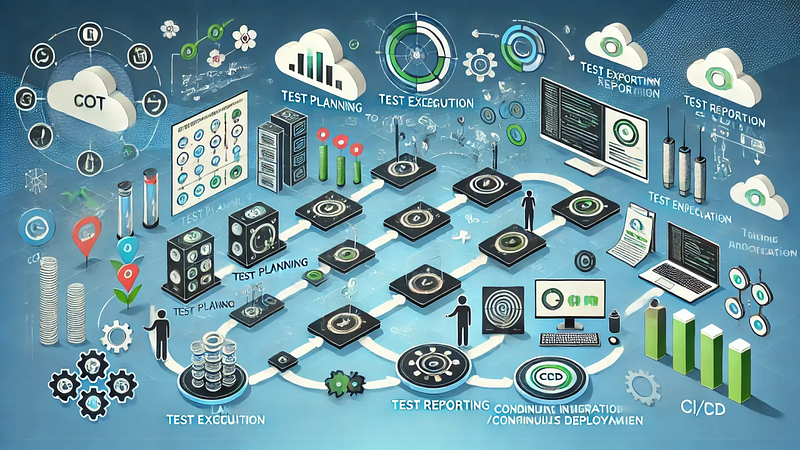

- CI/CD Integration

A mature testing pipeline takes time to set up. Taking the slower route — configuring quality gates, setting up smoke tests, implementing canary releases — pays off with smoother releases and fewer rollbacks.
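A quality gate can be as simple as a script that the pipeline runs after the test stage. The sketch below is one possible shape, not a prescription: the metric names, the `BuildMetrics` and `GateThresholds` types, and the 80% coverage threshold are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BuildMetrics:
    """Hypothetical summary of one pipeline run."""
    tests_run: int
    tests_failed: int
    line_coverage: float       # percentage, 0 to 100
    smoke_tests_passed: bool


@dataclass(frozen=True)
class GateThresholds:
    """Illustrative limits; real values are a team decision."""
    max_failed_tests: int = 0
    min_line_coverage: float = 80.0


def evaluate_gate(metrics: BuildMetrics, thresholds: GateThresholds = GateThresholds()) -> list[str]:
    """Return the list of gate violations; an empty list means the build may proceed."""
    violations = []
    if not metrics.smoke_tests_passed:
        violations.append("smoke tests failed")
    if metrics.tests_run == 0:
        violations.append("no tests were run")
    if metrics.tests_failed > thresholds.max_failed_tests:
        violations.append(
            f"{metrics.tests_failed} failed tests (allowed: {thresholds.max_failed_tests})"
        )
    if metrics.line_coverage < thresholds.min_line_coverage:
        violations.append(
            f"line coverage {metrics.line_coverage}% is below {thresholds.min_line_coverage}%"
        )
    return violations
```

In a pipeline, the calling script would print the violations and exit with a non-zero status when the list is not empty, which is what stops the release from moving forward.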

The Myth of “Slow Means Inefficient”

Taking a slow but sure approach doesn’t mean delaying delivery — it means avoiding rework, burnout, and firefighting later. It means embracing:

- Patience in planning.

- Deliberation in design.

- Confidence in coverage.

In fact, slow and sure testing aligns perfectly with agile principles. Agile isn’t about haste — it’s about responding to change with confidence. And confidence comes from knowing your product has been tested deeply and wisely.

Balancing Speed and Stability

Of course, deadlines exist. Releases must go out. So how do we balance “slow but sure” with the need for speed?

- Shift Left: Start testing early and involve testers in design discussions.

- Risk-Based Testing: Focus deeply on high-risk areas rather than testing everything equally.

- Automate Wisely: Automate the repeatable, so humans can focus on what really needs critical thinking.

- Build Quality Culture: Encourage developers to test better, write clean code, and own quality collectively.
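Risk-based testing in particular lends itself to a small worked example. A common heuristic, used here as an assumption rather than a rule, is to score each area as likelihood of failure multiplied by business impact and spend the deepest testing on the highest scores. The feature areas and ratings below are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureArea:
    name: str
    failure_likelihood: int  # team estimate, 1 (rare) to 5 (frequent)
    business_impact: int     # team estimate, 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> int:
        return self.failure_likelihood * self.business_impact


def prioritize(areas: list[FeatureArea]) -> list[FeatureArea]:
    """Order areas from highest to lowest risk score."""
    return sorted(areas, key=lambda area: area.risk_score, reverse=True)


# Hypothetical ratings for a hypothetical product.
AREAS = [
    FeatureArea("payment checkout", failure_likelihood=3, business_impact=5),
    FeatureArea("profile avatar upload", failure_likelihood=2, business_impact=1),
    FeatureArea("login and session handling", failure_likelihood=2, business_impact=5),
    FeatureArea("search filters", failure_likelihood=4, business_impact=2),
]

if __name__ == "__main__":
    for area in prioritize(AREAS):
        print(f"{area.risk_score:>2}  {area.name}")
```

With these ratings, checkout and login rise to the top of the list, so they get the slow, careful attention, while the avatar upload can be covered by a lighter check.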

Test-Fast: Because fast starts with thoughtful.

Conclusion

In software testing, “fast” is often a false friend. While velocity is important, reliability is essential. By embracing a slow but sure approach, testers don’t just find bugs — they build trust. They lay the foundation for scalable, secure, and successful products. So next time you’re asked to hurry testing, remember: slow is smooth, and smooth is fast.

Ready to test smart? Take your time. Do it right. Deliver with confidence.